In recent weeks, journals published two papers purporting to draw broad cultural inferences from Google’s ngram corpus. The first of these papers, in PLoS One, argued that “language in American books has become increasingly focused on the self and uniqueness” since 1960. The second, in The Journal of Positive Psychology, argued that “moral ideals and virtues have largely waned from the public conversation” in twentieth-century America. Both articles received substantial attention from journalists and blogs; both have been discussed skeptically by linguists and digital humanists. (Mark Liberman’s takes on Language Log are particularly worth reading.)

I’m writing this post because systems of academic review and communication are failing us in cases like this, and we need to step up our game. Tools like Google’s ngram viewer have created new opportunities, but also new methodological pitfalls. Humanists are aware of those pitfalls, but I think we need to work a bit harder to get the word out to journalists, and to disciplines like psychology.

The basic methodological problem in both articles is that researchers have used present-day patterns of association to define a wordlist that they then take as an index of the fortunes of some concept (morality, individualism, etc.) over historical time. (In the second study, for instance, words associated with morality were extracted from a thesaurus and crowdsourced using Mechanical Turk.)

The fallacy involved here has little to do with hot-button issues of quantification. A basic premise of historicism is that human experience gets divided up in different ways in different eras. If we crowdsource “leadership” using twenty-first-century reactions on Mechanical Turk, for instance, we’ll probably get words like “visionary” and “professional.” “Loud-voiced” probably won’t be on the list — because that’s just rude. But to Homer, there’s nothing especially noble about working for hire (“professionally”), whereas “the loud-voiced Achilles” is cut out to be a leader of men, since he can be heard over the din of spears beating on shields (Blackwell).

The authors of both articles are dimly aware of this problem, but they imagine that it’s something they can dismiss if they’re just conscientious and careful to choose a good list of words. I don’t blame them; they’re not coming from historical disciplines. But one of the things you learn by working in a historical discipline is that our perspective is often limited by history in ways we are unable to anticipate. So if you want to understand what morality meant in 1900, you have to work to reconstruct that concept; it is not going to be intuitively accessible to you, and it cannot be crowdsourced.

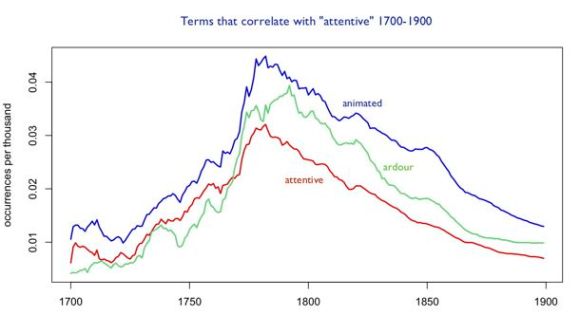

The classic way to reconstruct concepts from the past involves immersing yourself in sources from the period. That’s probably still the best way, but where language is concerned, there are also quantitative techniques that can help. For instance, Ryan Heuser and Long Le-Khac have carried out research on word frequency in the nineteenth-century novel that might superficially look like the psychological articles I am critiquing. (It’s Pamphlet 4 in the Stanford Literary Lab series.) But their work is much more reliable and more interesting, because it begins by mining patterns of association from the period in question. They don’t start from an abstract concept like “individualism” and pick words that might be associated with it. Instead, they find groups of words that are associated with each other, in practice, in nineteenth-century novels, and then trace the history of those groups. In doing so, they find some intriguing patterns that scholars of the nineteenth-century novel are going to need to pay attention to.
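To make the contrast concrete, here is a minimal sketch of what "finding groups of words that are associated with each other in practice" could look like. It is not Heuser and Le-Khac's actual pipeline. It assumes that "association" is measured by correlating yearly relative frequencies within the period, that groups are formed by simple single-link merging above a threshold, and that the function names (`pearson`, `cohorts`), the threshold, and the toy numbers are all invented for illustration.

```python
from math import sqrt


def pearson(xs, ys):
    """Pearson correlation between two equal-length frequency series."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        return 0.0  # a flat series carries no trend information
    return cov / sqrt(var_x * var_y)


def cohorts(freqs, threshold=0.9):
    """Group words whose trajectories correlate at or above `threshold`.

    `freqs` maps each word to its relative frequency per time slice,
    measured in the period under study (not in present-day usage).
    Grouping is single-link: a word joins a group if it correlates
    strongly with any one member. The threshold is an arbitrary assumption.
    """
    words = sorted(freqs)
    parent = {w: w for w in words}

    def find(w):
        # Follow parent links to the group's representative word.
        while parent[w] != w:
            parent[w] = parent[parent[w]]
            w = parent[w]
        return w

    for i, a in enumerate(words):
        for b in words[i + 1:]:
            if pearson(freqs[a], freqs[b]) >= threshold:
                parent[find(a)] = find(b)

    groups = {}
    for w in words:
        groups.setdefault(find(w), []).append(w)
    return sorted(sorted(g) for g in groups.values())


# Hypothetical toy data: invented numbers, not measurements from any corpus.
toy = {
    "virtue": [9, 8, 7, 5, 4],
    "modesty": [6, 5.5, 5, 4, 3.5],
    "red": [2, 3, 4, 6, 7],
    "hard": [1, 1.5, 2, 3, 3.5],
}
groups = cohorts(toy)
print(groups)
```

The point of the design is that the groups emerge from the period's own usage: nobody decides in advance which words belong to "morality." Interpreting what a group means is still a job for someone who has read the sources.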

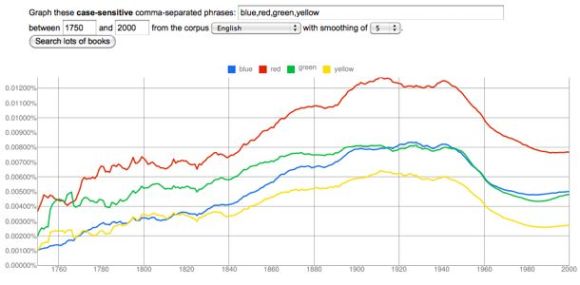

It’s also relevant that Heuser and Le-Khac are working in a corpus that is limited to fiction. One of the problems with the Google ngram corpus is that we really have no idea what genres are represented in it, or how their relative proportions may vary over time. So it’s possible that an apparent decline in the frequency of words for moral values is actually a decline in the frequency of certain genres (say, conduct books or hagiographic biographies). A decline of that sort would still be telling us something about literary culture; but it might be telling us something different from what we initially assume when we trace the decline of a word like “fidelity.”
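The arithmetic behind that worry is easy to sketch. In the toy example below, every number is hypothetical: the genre names, the per-genre rates, and the corpus shares are invented, since the real genre proportions of the ngram corpus are exactly what we don't know. The function `overall_rate` is likewise just an illustrative name.

```python
def overall_rate(shares, rates):
    """Corpus-wide frequency of a word as a share-weighted average.

    `shares` maps genre -> fraction of the corpus (fractions sum to 1).
    `rates` maps genre -> occurrences of the word per million words.
    """
    return sum(shares[genre] * rates[genre] for genre in shares)


# Hypothetical: the word is used at the SAME rate within each genre
# in both periods. Only the mix of genres in the corpus changes.
rates = {"conduct_books": 50.0, "fiction": 5.0}
shares_early = {"conduct_books": 0.20, "fiction": 0.80}
shares_late = {"conduct_books": 0.02, "fiction": 0.98}

early = overall_rate(shares_early, rates)
late = overall_rate(shares_late, rates)
print(early, late)
```

With these made-up figures the corpus-wide rate falls by more than half (from about 14 to about 5.9 per million), even though no writer in either genre uses the word any less often than before.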

So please, if you know a psychologist, or journalist, or someone who blogs for The Atlantic: let them know that there is actually an emerging interdisciplinary field developing a methodology to grapple with this sort of evidence. Articles that purport to draw historical conclusions from language need to demonstrate that they have thought about the problems involved. That will require thinking about math, but it also, definitely, requires thinking about dilemmas of historical interpretation.

References

My illustration about “loud-voiced Achilles” is a very old example of the way concepts change over time, drawn via Friedrich Meinecke from Thomas Blackwell, An Enquiry into the Life and Writings of Homer, 1735. The word “professional,” by the way, also illustrates a kind of subtly moralized contemporary vocabulary that Kesebir & Kesebir may be ignoring in their account of the decline of moral virtue. One of the other dilemmas of historical perspective is that we’re in our own blind spot.