It’s only in the last few months that I’ve come to understand how complex search engines actually are. Part of the logic of their success has been to hide the underlying complexity from users. We don’t have to understand how a search engine assigns different statistical weights to different terms; we just keep adding terms until we find what we want. The differences between algorithms (which range widely in complexity, and are based on different assumptions about the relationship between words and documents) never cross our minds.

I’m pretty sure search engines have transformed literary scholarship. There was a time (I can dimly recall) when it was difficult to find new primary sources. You had to browse through a lot of bibliographies looking for an occasional lead. I can also recall the thrill I felt, at some point in the 90s, when I realized that full-text searches in a Chadwyck-Healey database could rapidly produce a much larger number of leads — things relevant to my topic, that no one else seemed to have read. Of course, I wasn’t the only one realizing this. I suspect the wave of challenges to canonical “Romanticism” in the 90s had a lot to do with the fact that Romanticists all realized, around the same time, that we had been looking at the tip of an iceberg.

One could debate whether search engines have exerted, on balance, a positive or negative influence on scholarship. If the old paucity of sources tempted critics to endlessly chew over a small and unrepresentative group of authors, the new abundance may tempt us to treat all works as more or less equivalent — when perhaps they don’t all provide the same kinds of evidence. But no one blames search engines themselves for this, because search isn’t an evidentiary process. It doesn’t claim to prove a thesis. It’s just a heuristic: a technique that helps you find a lead, or get a better grip on a problem, and thus abbreviates the quest for a thesis.

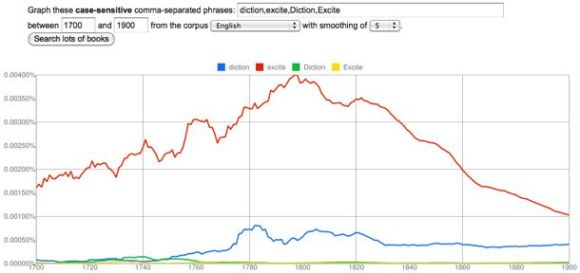

The lesson I would take away from this story is that it’s much easier to transform a discipline when you present a new technique as a heuristic than when you present it as evidence. Of course, common sense tells us that the reverse is true. Heuristics tend to be unimpressive. They’re often quite simple. In fact, that’s the whole point: heuristics abbreviate. They also “don’t really prove anything.” I’m reminded of the chorus of complaints that greeted the Google ngram viewer when it first came out, to the effect that “no one knows what these graphs are supposed to prove.” Perhaps they don’t prove anything. But I find that in practice they’re already guiding my research, by doing a kind of temporal orienteering for me. I might have guessed that “man of fashion” was a buzzword in the late eighteenth century, but I didn’t know that “diction” and “excite” were as well.

What does a miscellaneous collection of facts about different buzzwords prove? Nothing. But my point is that if you actually want to transform a discipline, sometimes it’s a good idea to prove nothing. Give people a new heuristic, and let them decide what to prove. The discipline will be transformed, and it’s quite possible that no one will even realize how it happened.

POSTSCRIPT, May 1, 2011: For a slightly different perspective on this issue, see Ben Schmidt’s distinction between “assisted reading” and “text mining.” My own thinking about this issue was originally shaped by Schmidt’s observations, but on re-reading his post I realize that we’re putting the emphasis in slightly different places. He suggests that digital tools will be most appealing to humanists if they resemble, or facilitate, familiar kinds of textual encounter. While I don’t disagree, I would like to imagine that humanists will turn out in the end to be a little more flexible: I’m emphasizing the “heuristic” nature of both search engines and the ngram viewer in order to suggest that the key to the success of both lies in the way they empower the user. But — as the word “tricked” in the title is meant to imply — empowerment isn’t the same thing as self-determination. To achieve that, we need to reflect self-consciously on the heuristics we use. Which means that we need to realize we’re already doing text mining, and consider building more appropriate tools.

This post was originally titled “Why Search Was the Killer App in Text-Mining.”


Benjamin Schmidt has been posting some fascinating reflections on different ways of analyzing texts digitally and characterizing the affinities between them.

I’m tempted to briefly comment on a technique of his that I find very promising. This is something that I don’t yet have the tools to put into practice myself, and perhaps I shouldn’t comment until I do. But I’m just finding the technique too intriguing to resist speculating about what might be done with it.

Basically, Schmidt describes a way of mapping the relationships between terms in a particular archive. He starts with a word like “evolution,” identifies texts in his archive that use the word, and then uses tf-idf weighting to identify the other words that, statistically, do most to characterize those texts.
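To make the first step concrete, here is a minimal sketch of how one might rank the words that characterize the texts containing a seed word. It is not Schmidt's code: the function name `characteristic_terms`, the toy documents, and the particular tf-idf variant (relative term frequency times the log of inverse document frequency) are all assumptions chosen for illustration, and it performs only a single pass rather than the repeated iteration he describes.

```python
import math
from collections import Counter

def characteristic_terms(docs, seed, top_n=5):
    """Rank words that characterize the documents containing `seed`.

    docs is a list of token lists. Each word's score is its tf-idf weight
    summed over the seed-bearing documents; idf uses the whole collection.
    (Hypothetical helper, one of many possible tf-idf variants.)
    """
    n_docs = len(docs)
    # Document frequency: the number of documents each word appears in.
    doc_freq = Counter()
    for tokens in docs:
        doc_freq.update(set(tokens))

    scores = Counter()
    for tokens in docs:
        if seed not in tokens:
            continue  # only documents that use the seed word contribute
        counts = Counter(tokens)
        for word, count in counts.items():
            if word == seed:
                continue
            tf = count / len(tokens)
            idf = math.log(n_docs / doc_freq[word])
            scores[word] += tf * idf
    return [word for word, _ in scores.most_common(top_n)]

# Invented toy "archive": two documents mention the seed, two do not.
docs = [
    "evolution species selection species darwin".split(),
    "evolution society progress society race".split(),
    "sermon grace faith grace".split(),
    "voyage ship sea ship".split(),
]
top_two = characteristic_terms(docs, "evolution", top_n=2)
```

In this toy case the two top-ranked words are "species" and "society," each of which is frequent in one seed-bearing document and absent elsewhere. A word that appeared in every document would get an idf of zero and drop out, which is the point of the weighting.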

After iterating this process a few times, he has a list of something like 100 terms that are related to “evolution” in the sense that this whole group of terms tends, not just to occur in the same kinds of books, but to be statistically prominent in them. He then uses a range of different clustering algorithms to break this list into subsets. There is, for instance, one group of terms that’s clearly related to social applications of evolution, another that seems to be drawn from anatomy, and so on. Schmidt characterizes this as a process that maps different “discourses.” I’m particularly interested in his decision not to attempt topic modeling in the strict sense, because it echoes my own hesitation about that technique:

In the language of text analysis, of course, I’m drifting towards not discourses, but a simple form of topic modeling. But I’m trying to only submerge myself slowly into that pool, because I don’t know how well fully machine-categorized topics will help researchers who already know their fields. Generally, we’re interested in heavily supervised models on locally chosen groups of texts.

This makes a lot of sense to me. I’m not sure that I would want a tool that performed pure “topic modeling” from the ground up — because in a sense, the better that tool performed, the more it might replicate the implicit processing and clustering of a human reader, and I already have one of those.

Schmidt’s technique is interesting to me because the initial seed word gives it what you might call a bias, as well as a focus. The clusters he produces aren’t necessarily the same clusters that would emerge if you tried to map the latent topics of his whole archive from the ground up. Instead, he’s producing a map of the semantic space surrounding “evolution,” as seen from the perspective of that term. He offers this less as a finished product than as an example of a heuristic that humanists might use for any keyword that interested them, much in the way we’re now accustomed to using simple search strategies. Presumably it would also be possible to move from the semantic clusters he generates to a list of the documents they characterize.

I think this is a great idea, and I would add only that it could be adapted for a number of other purposes. Instead of starting with a particular seed word, you might start with a list of terms that happen to be prominent in a particular period or genre, and then use Schmidt’s technique of clustering based on tf-idf correlations to analyze the list. “Prominence” can be defined in a lot of different ways, but I’m particularly interested in words that display a similar profile of change across time.

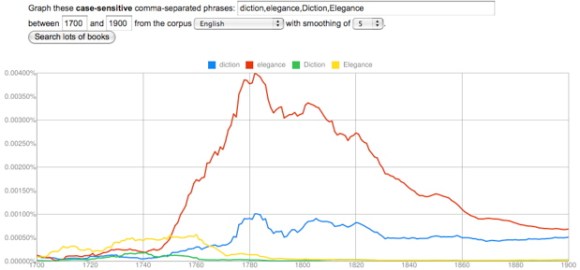

For instance, I think it’s potentially rather illuminating that “diction” and “elegance” change in closely correlated ways in the late eighteenth and early nineteenth century. It’s interesting that they peak at the same time, and I might even be willing to say that the dip they both display, in the radical decade of the 1790s, suggests that they had a similar kind of social significance. But of course there will be dozens of other terms (and perhaps thousands of phrases) that also correlate with this profile of change, and the Google dataset won’t do anything to tell us whether they actually occurred in the same sorts of books. This could be a case of unrelated genres that happened to have emerged at the same time.
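One simple way to look for words with a similar profile of change is to compute a Pearson correlation between their yearly frequencies. The sketch below assumes that measure; the function names and all of the numbers are invented for illustration and are not drawn from the Google dataset.

```python
import math

def pearson(xs, ys):
    """Pearson correlation between two equal-length frequency series."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("series must have the same length (at least 2)")
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        return 0.0  # a flat series has no profile to correlate with
    return cov / math.sqrt(var_x * var_y)

def correlated_terms(target, series_by_word, threshold=0.9):
    """Return the words whose series correlate with `target` at or above threshold."""
    return sorted(
        word for word, series in series_by_word.items()
        if pearson(target, series) >= threshold
    )

# Invented frequencies for five successive decades (not real data).
diction = [1.0, 2.0, 3.0, 2.0, 4.0]
candidates = {
    "elegance": [2.0, 4.1, 6.0, 3.9, 8.2],
    "railway": [4.0, 3.0, 2.0, 2.0, 1.0],
}
matches = correlated_terms(diction, candidates)
```

A list produced this way only says that the curves move together. It says nothing about whether the words occur in the same books, which is exactly the limitation described above.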

But I think a list of chronologically correlated terms could tell you a lot if you then took it to an archive with metadata, where Schmidt’s technique of tf-idf clustering could be used to break the list apart into subsets of terms that actually did occur in the same groups of works. In effect this would be a kind of topic modeling, but it would be topic modeling combined with a filter that selects for a particular kind of historical “topicality” or timeliness. I think this might tell me a lot, for instance, about the social factors shaping the late-eighteenth-century vogue for characterizing writing based on its “diction” — a vogue that, incidentally, has a loose relationship to data mining itself.

I’m not sure whether other humanists would accept this kind of technique as evidence. Schmidt has some shrewd comments on the difference between data mining and assisted reading, and he’s right that humanists are usually going to prefer the latter. Plus, the same “bias” that makes a technique like this useful dispels any illusion that it is a purely objective or self-generating pattern. It’s clearly a tool used to slice an archive from a particular angle, for particular reasons.

But whether I could use it as evidence or not, a technique like this would be heuristically priceless: it would give me a way of identifying topics that peculiarly characterize a period — or perhaps even, as the dip in the 1790s hints, a particular impulse in that period — and I think it would often turn up patterns that are entirely unexpected. It might generate these patterns by looking for correlations between words, but it would then be fairly easy to turn lists of correlated words into lists of works, and investigate those in more traditionally humanistic ways.

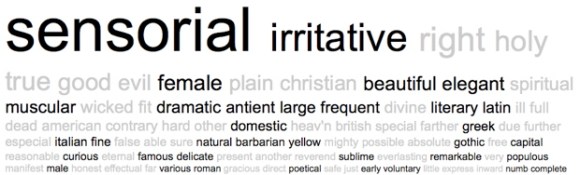

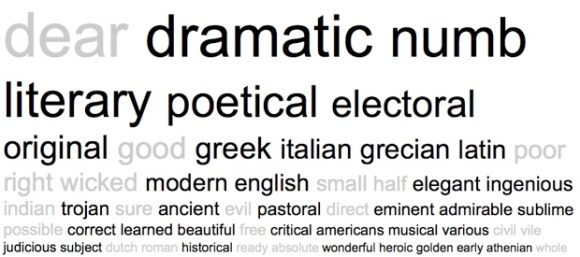

For instance, I had no idea that “diction” would correlate with “elegance” until I stumbled on the connection, but having played around with the terms a bit in MONK, I’m already getting a sense that the terms are related not just through literary criticism (as you might expect), but also through historical discourse and (oddly) discourse about the physiology of sensation. I don’t have a tool yet that can really perform Schmidt’s sort of tf-idf clustering, but just to leave you with a sense of the interesting patterns I’m glimpsing, here’s a word cloud I generated in MONK by contrasting eighteenth-century works that contain “elegance” to the larger reference set of all eighteenth-century works. The cloud is based on Dunning’s log likelihood, and limited to adjectives, frankly, just because they’re easier to interpret at first glance.

There’s a pretty clear contrast here between aesthetic and moral discourse, which is interesting to begin with. But it’s also interesting that the emphasis on aesthetics extends into physiological terms like “sensorial,” “irritative,” and “numb,” and historical terms like “Greek” and “Latin.” Moreover, many of the same terms recur if you pursue the same strategy with “diction.”

A lot of words here are predictably literary, but again you see sensory terms like “numb,” and historical ones like “Greek,” “Latin,” and “historical” itself. Once again, moreover, moral discourse is interestingly underrepresented. This is actually just one piece of the larger pattern you might generate if you pursued Schmidt’s clustering strategy — plus, Dunning’s is not the same thing as tf-idf clustering, and the MONK corpus of 1000 eighteenth-century works is smaller than one would wish — but the patterns I’m glimpsing are interesting enough to suggest to me that this general kind of approach could tell me a lot of things I don’t yet know about a period.
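For readers who haven't met Dunning's log likelihood, here is a minimal sketch of one common way to compute it for a single word, comparing an analysis set (say, works containing “elegance”) with a reference set. The two-term formula used here is a widely used simplification; I'm assuming it for illustration and can't say it is exactly what MONK computes. The function names and counts are invented.

```python
import math

def log_likelihood(a, b, c, d):
    """Dunning-style log likelihood (G2) for one word.

    a: occurrences of the word in the analysis set
    b: occurrences of the word in the reference set
    c: total tokens in the analysis set
    d: total tokens in the reference set
    """
    # Expected counts if the word were equally common in both sets.
    expected_a = c * (a + b) / (c + d)
    expected_b = d * (a + b) / (c + d)
    g2 = 0.0
    if a > 0:
        g2 += a * math.log(a / expected_a)
    if b > 0:
        g2 += b * math.log(b / expected_b)
    return 2 * g2

def overrepresented(a, b, c, d):
    """True if the word is relatively more frequent in the analysis set."""
    return a / c > b / d

# Invented counts: same rate in both sets, then a higher rate in the analysis set.
same_rate = log_likelihood(10, 10, 1000, 1000)
higher_rate = log_likelihood(20, 10, 1000, 1000)
```

The score measures how surprising the difference is, not its direction, so a separate check like `overrepresented` is needed to tell words that are unusually common in the analysis set from words that are unusually rare there.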

In my last post, I argued that groups of related terms that express basic sensory oppositions (wet/dry, hot/cold, red/green/blue/yellow) have a tendency to correlate strongly with each other in the Google dataset. When “wet” goes up in frequency, “dry” tends to go up as well, as if the whole sensory category were somehow becoming more prominent in writing. Primary colors rise and fall as a group as well.

In that post I focused on a group of categories (temperature, color, and wetness) that all seem to become more prominent from 1820 to 1940, and then start to decline. The pattern was so consistent that you might start to wonder whether it’s an artefact of some flaw in the data. Does every adjective go up from 1820 to 1940? Not at all. A lot of them (say, “melancholy”) peak roughly where the ones I’ve been graphing hit a minimum. And it’s possible to find many paired oppositions that correlate like hot/cold or wet/dry, but peak at a different point.

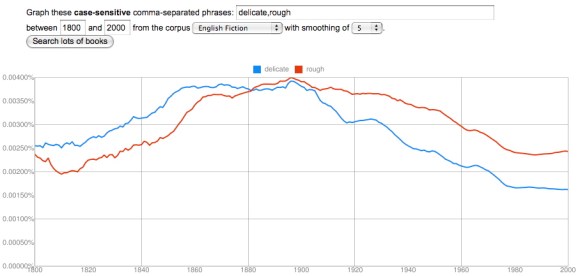

“Delicate” and “rough” correlate loosely (with an interesting lag), but peak much earlier than words for temperature or color, somewhere between 1880 and 1900. Now, it’s fair to question whether “delicate” and “rough” are actually antonyms. Perhaps the opposite of “rough” is actually “smooth”? As we get away from the simplest sensory categories there’s going to be more ambiguity than there was with “wet” and “dry,” and the neat structural parallels I traced in my previous post are going to be harder to find. I think it’s possible, however, that we’ll be able to discover some interesting patterns simply by paying attention to the things that do in practice correlate with each other at different times. The history of diction seems to be characterized by a sequence of long “waves” where different conceptual categories gradually rise to prominence, and then decline.

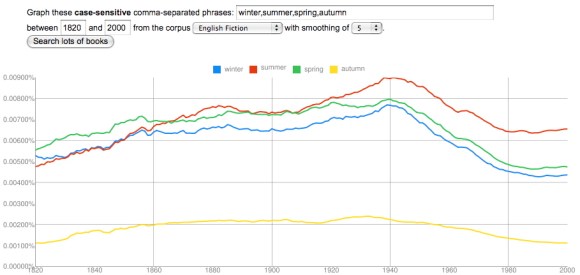

I should credit mmwm at the blog Beyond Rivalry for the clue that led to my next observation, which is that it’s not just certain sensory adjectives (like hot/cold/cool/warm) that rise to prominence from 1820 to 1940, but also a few nouns loosely related to temperature, like the seasons.

I’ve started this graph at 1820 rather than 1800, because the long s/f substitution otherwise creates noise at the very beginning. And I’ve chosen “autumn” rather than “fall” to avoid interference from the verb. But the pattern here is very similar to the pattern I described in my last post: there’s a low around 1820 and a high around 1940. (Looking at the data for fummer and fpring, I suspect that the frequency of all four seasons does increase as you go back before 1820.)

As I factor in some of this evidence, I’m no longer sure it’s adequate to characterize this trend generally as an increase in “concreteness” or “sensory vividness” — although that might be how Ernest Hemingway and D. H. Lawrence themselves would have imagined it. Instead, it may be necessary to describe particular categories that became more prominent in the early 20c (maybe temperature? color?) while others (perhaps delicacy/roughness?) began to decline. Needless to say, this is all extremely tentative; I don’t specialize in modernism, so I’m not going to try to explain what actually happened in the early 20c. We need more context to be confident that these patterns have significance, and I’ll leave the task of explaining their significance to people who know the literature more intimately. I’m just drawing attention to a few interesting patterns, which I hope might provoke speculation.

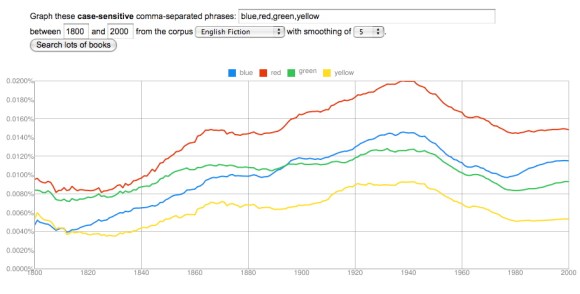

Finally, I should note that all of the changes I’ve graphed here, and in the last post, were based on the English fiction dataset. Some of these correlations are a little less striking in the main English dataset (although some are also more striking). I’m restricting myself to fiction right now to avoid cherry-picking the prettiest graphs.

The rise of a sensory style?

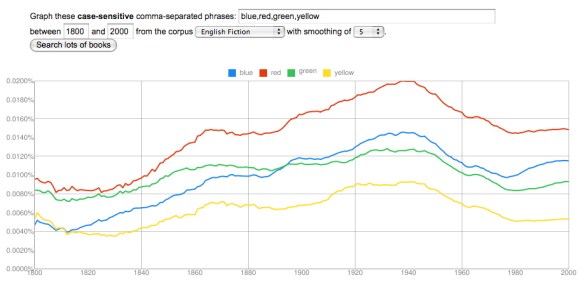

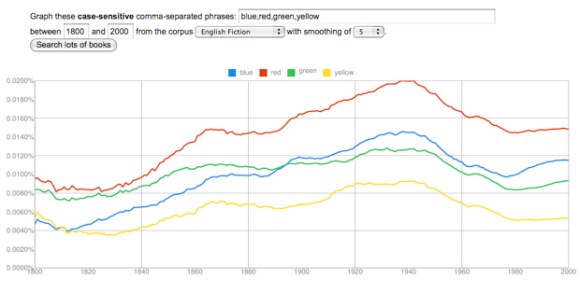

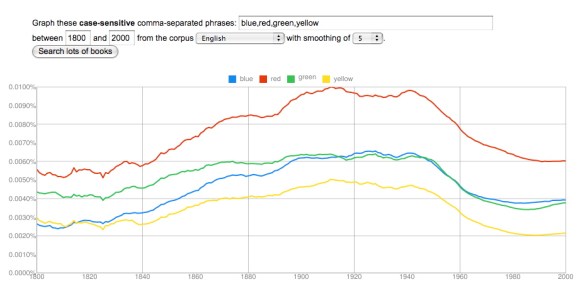

I ended my last post, on colors, by speculating that the best explanation for the rise of color vocabulary from 1820 to 1940 might simply be “a growing insistence on concrete and vivid sensory detail.” Here’s the graph once again to illustrate the shape of the trend.

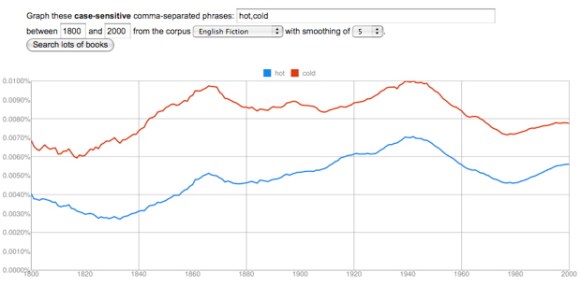

It occurred to me that one might try to confirm this explanation by seeing what happened to other words that describe fairly basic sensory categories. Would words like “hot” and “cold” change in strongly correlated ways, as the names of primary colors did? And if so, would they increase in frequency across the same period from 1820 to 1940?

The results were interesting.

“Hot” and “cold” track each other closely. There is indeed a low around 1820 and a peak around 1940. “Cold” increases by about 60%, “hot” by more than 100%.

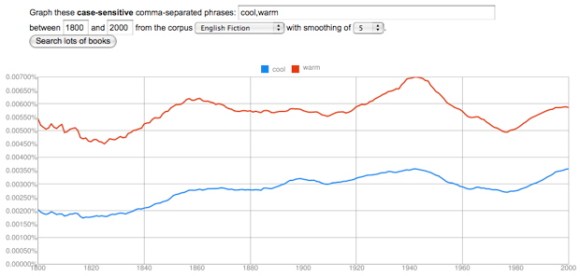

“Warm” and “cool” are also strongly correlated, increasing by more than 50%, with a low around 1820 and a high around 1940 — although “cool” doesn’t decline much from its high, probably because the word acquires an important new meaning related to style.

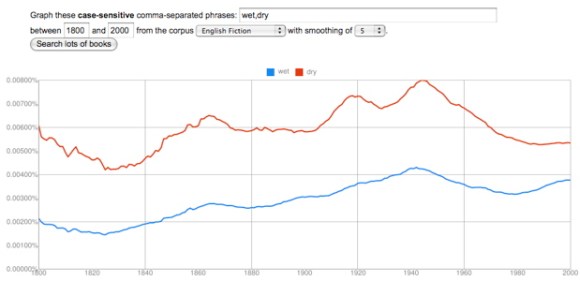

“Wet” and “dry” correlate strongly, and they both double in frequency. Once again, a low around 1820 and a peak around 1940, at which point the trend reverses.
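The arithmetic behind statements like “doubles in frequency” is simple enough to sketch. The helper below finds the lowest and highest points in a series and reports the percentage change between them; the function name and the numbers are invented for illustration, not taken from the ngram data.

```python
def low_to_peak(series):
    """Return (low_year, peak_year, percent_change) for a frequency series.

    series maps a year to a relative frequency. The change is measured
    from the lowest value to the highest, as a percentage of the lowest.
    """
    low_year = min(series, key=series.get)
    peak_year = max(series, key=series.get)
    low, peak = series[low_year], series[peak_year]
    return low_year, peak_year, (peak - low) / low * 100

# Invented frequencies shaped like the curves described above.
wet = {1800: 12.0, 1820: 10.0, 1880: 15.0, 1940: 20.0, 2000: 14.0}
low_year, peak_year, change = low_to_peak(wet)
```

Here the low falls at 1820, the peak at 1940, and the change is 100 percent, which is what “doubling” means. This version assumes the low comes before the peak; a series that peaks first and then falls would need the comparison restricted to a chosen range of years.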

There’s a lot of room for further investigation here. I think I glimpse a loosely similar pattern in words for texture (hard/soft and maybe rough/smooth), but it’s not clear whether the same pattern will hold true for the senses of smell, hearing, or taste.

More crucially, I have absolutely no idea why these curves head up in 1820 and reverse direction in 1940. To answer that question we would need to think harder about the way these kinds of adjectives actually function in specific works of fiction. But it’s beginning to seem likely that the pattern I noticed in color vocabulary is indeed part of a broader trend toward a heightened emphasis on basic sensory adjectives — at least in English fiction. I’m not sure that we literary critics have an adequate name for this yet. “Realism” and “naturalism” can only describe parts of a trend that extends from 1820 to 1940.

More generally, I feel like I’m learning that the words describing different poles or aspects of a fundamental opposition often move up or down as a unit. The whole semantic distinction seems to become more prominent or less so. This doesn’t happen in every case, but it happens too often to be accidental. Somewhere, Claude Lévi-Strauss can feel pretty pleased with himself.

It’s tempting to use the ngram viewer to stage semantic contrasts (efficiency vs. pleasure). It can be more useful to explore cases of semantic replacement (liberty vs. freedom). But a third category of comparison, perhaps even more interesting, involves groups of words that parallel each other quite closely as the whole group increases or decreases in prominence.

One example that is conveniently easy to visualize involves colors.

The trajectories of primary colors parallel each other very closely. They increase in frequency through the nineteenth century, peak in a period between 1900 and 1945, and then decline to a low around 1985, with some signs of recovery. (The recovery is more marked after 2000, but that data may not be reliable yet.) Blue increases most, by a factor of almost three, and green the least, by about 50%. Red and yellow roughly double in frequency.

Perhaps red increases because of red-baiting, and blue increases because jazz singers start to use it metaphorically? Perhaps. But the big picture here is that the relative prominence of different colors remains fairly stable (red being always most prominent), while they increase and decline significantly as a group. This is a bit surprising. Color seems like a basic dimension of human experience, and you wouldn’t expect its importance to fluctuate. (If you graph the numbers one, two, three, for instance, you get fairly flat lines all the way across.)
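One way to separate the two claims here (stable relative prominence, changing group prominence) is to divide each color's frequency by the group total for that year. This is a minimal sketch under that assumption; the function name and the figures are invented, and the real curves are not this tidy.

```python
def group_shares(freqs_by_year):
    """Split each year's frequencies into a group total and per-word shares.

    freqs_by_year maps year -> {word: frequency}.
    Returns year -> (group_total, {word: share_of_total}).
    """
    result = {}
    for year, freqs in freqs_by_year.items():
        total = sum(freqs.values())
        shares = {word: freq / total for word, freq in freqs.items()}
        result[year] = (total, shares)
    return result

# Invented numbers: the group doubles while each color keeps its share.
colors = {
    1850: {"red": 40.0, "blue": 20.0, "green": 20.0, "yellow": 20.0},
    1930: {"red": 80.0, "blue": 40.0, "green": 40.0, "yellow": 40.0},
}
shares = group_shares(colors)
```

If the shares stay roughly constant while the totals rise and fall, the movement belongs to the category as a whole rather than to any one color, which is the pattern described above.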

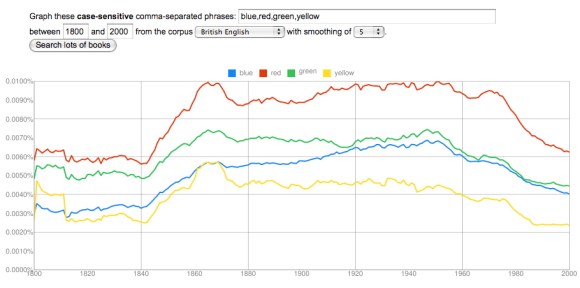

What about technological change? Color photography is really too late to be useful. Maybe synthetic dyes? They start to arrive on the scene in the 1860s, which is also a little late, since the curves really head up around 1840, but it’s conceivable that a consumer culture with a broader range of artefacts brightly differentiated by color might play a role here. If you graph British usage, there’s even an initial peak in the 1860s and 70s that looks plausibly related to the advent of synthetic dye.

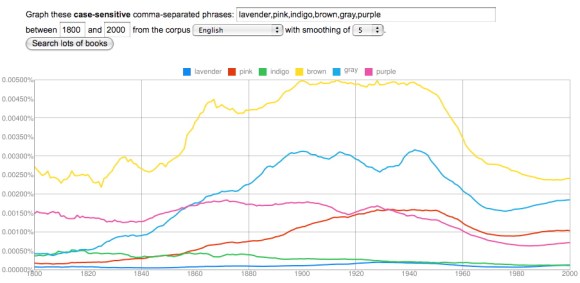

On the other hand, if this is a technological change, it’s a little surprising that it looks so different in different national traditions. (The French and German corpora may not be reliable yet, but at this point their colors behave altogether differently.) Moreover, a hypothesis about synthetic dyes wouldn’t do much to explain the equally significant decline from the 1950s to the 1980s. Maybe the problem is that we’re only looking at primary colors. Perhaps in the twentieth century a broader range of words for secondary colors proliferated, and subtracted from the frequency of words like red and green?

This is a hard hypothesis to test, because there are a lot of different words for color, and you’d need to explore perhaps a hundred before you had a firm answer. But at first glance, it doesn’t seem very helpful, because a lot of words for minor colors exhibit a pattern that closely resembles primary colors. Brown, gray, purple, and pink — the leaders in the graph above — all decline from 1950 to 1980. Even black and white (not graphed here) don’t help very much; they display a similar pattern of increase beginning around 1840 and decrease beginning around 1940, until the 1960s, when the racial meanings of the terms begin to clearly dominate other kinds of variation.

At the moment, I think we’re simply looking at a broad transformation of descriptive style that involves a growing insistence on concrete and vivid sensory detail. One word for this insistence might be “realism.” We ordinarily apply that word to fiction, of course, and it’s worth noting that the increase in color vocabulary does seem to begin slightly earlier in the corpus of fiction — as early perhaps as the 1820s.

But “realism,” “naturalism,” “imagism,” and so on are probably not adequate words for a transformation of diction that covers many different genres and proceeds for more than a century. (It proceeds fairly steadily, although I would really like to understand that plateau from 1860 to 1890.) More work needs to be done to understand this. But the example of color vocabulary already hints, I think, that broadly diachronic studies of diction may turn up literary phenomena that don’t fit easily into literary scholars’ existing grid of periods and genres. We may need to define a few new concepts.