Data mining is troubling for some of the same reasons that social science in general is troubling. It suggests that our actions are legible from a perspective we don’t immediately possess, and reveal things we haven’t consciously chosen to reveal. This asymmetry of knowledge is unsettling even when posed abstractly as a question of privacy. It becomes more concretely worrisome when power is added to the equation. Kieran Healy has written a timely blog post showing how the network analysis that allows us to better understand Boston in the 1770s could also be used as an instrument of social control. The NSA’s programs of secret surveillance are Healy’s immediate target, but it’s not difficult to imagine that corporate data mining could be used in equally troubling ways.

Right now, for reasons of copyright law, humanists mostly mine data about the dead. But if we start teaching students how to do this, it’s very likely that some of them will end up working in corporations or in the government. So it’s reasonable to ask how we propose to deal with the political questions these methods raise.

My own view is that we should resist the temptation to say anything reassuring, because professional expertise can’t actually resolve the underlying political problem. Any reassurance academics might offer will be deceptive.

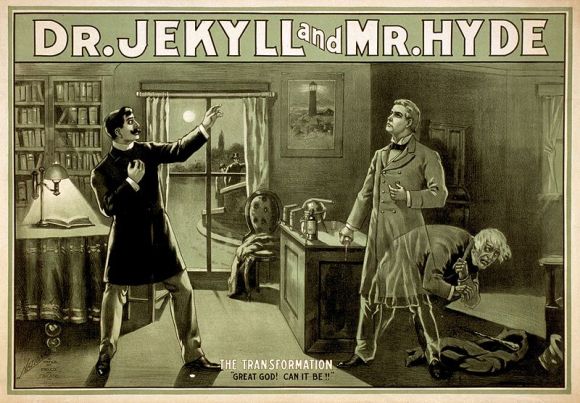

The classic form of this deception is familiar from the opening scenes of a monster movie. “Relax! I can assure you that the serum I have developed will only be used for good.”

For instance: “I admit that in their current form, these methods are problematic. They have the potential to reduce people to metadata in a way that would be complicit with state and corporate power. But we can’t un-invent computers or statistical analysis. So I think humanists need to be actively involved in these emerging discourses as cultural critics. We must apply our humanistic values to create a theoretical framework that will ensure new forms of knowledge get used in cautious, humane, skeptical ways.”

I suspect some version of that statement will be very popular among humanists. It strikes a tone we’re comfortable with, and it implies that there’s an urgent need for our talents. And in fact, there’s nothing wrong with articulating a critical, humanistic perspective on data mining. It’s worth a try.

But if you back up far enough — far enough that you’re standing outside the academy altogether — humanists’ claims about the restraining value of cultural critique sound a lot like “I promise only to use my powers for good.” The naive scientist says “trust me; my professional integrity will ensure that this gets used well.” The naive humanist says “trust me; my powers of skeptical critique will ensure that this gets used well.” I wouldn’t advise the public to trust either of them.

I don’t have a solution to offer, either. Just about everything human beings have invented — from long pointy sticks to mathematics to cultural critique — can be used badly. It’s entirely possible that we could screw things up in a major way, and end up in an authoritarian surveillance state. Mike Konczal suggests we’re already there. I think history has some useful guidance to offer, but ultimately, “making sure we don’t screw this up” is not a problem that can be solved by any form of professional expertise. It’s a political problem — which is to say, it’s up to all of us to solve it.

The case of Edward Snowden may be worth a moment’s thought here. I’m not in a position to decide whether he acted rightly. We don’t have all the facts yet, and even when we have them, it may turn out to be a nasty moral problem without clear answers. What is clear is that Snowden was grappling with exactly the kinds of political questions data mining will raise. He had to ask himself, not just whether the knowledge produced by the NSA was being abused today, but whether it was a kind of knowledge that might structurally invite abuse over a longer historical timeframe. To think that question through you have to know something about the ways societies can change; you have to imagine the perspectives of people outside your immediate environment, and you have to have some skepticism about the distorting effects of your own personal interest.

These are exactly the kinds of reflection that I hope the humanities foster; they have a political value that reaches well beyond data mining in particular. But Snowden’s case is especially instructive because he’s one of the 70% of Americans who don’t have a bachelor’s degree. Wherever he learned to think this way, it wasn’t from a college course in the humanities. Instead he seems to have relied on a vernacular political tradition that told him certain questions ought to be decided by “the public,” and not delegated to professional experts.

Again, I don’t know whether Snowden acted rightly. But in general, I think traditions of democratic governance are a more effective brake on abuses of knowledge than any code of professional ethics. In fact, the notion of “professional ethics” can be a bit counter-productive here since it implies that certain decisions have to be restricted to people with an appropriate sort of training or cultivation. (See Timothy Burke’s related reflections on “the covert imagination.”)

I’m not suggesting that we shouldn’t criticize abuses of statistical knowledge; on the contrary, that’s an important topic, and I expect that many good things will be written about it both by humanists and by statisticians. What I’m saying is that we shouldn’t imagine that our political responsibilities on this topic can ever be subsumed in or delegated to our professional identities. The tension between authoritarian and democratic uses of social knowledge is not a problem that can be resolved by a more chastened or enlightened methodology, or by any form of professional expertise. It requires concrete political action — which is to say, it has to be decided by all of us.

3 replies on “On not trusting people who promise “to use their powers for good.””

Very thoughtful post, Ted. I like the phrase “asymmetry of knowledge” as this is exactly what troubles me. The whole discussion reminds me of my father, who was doing psycholinguistics work in the 1960s and 1970s, some of which involved gauging the accuracy of translations. He was troubled to learn at a conference that the research had been helpful in translating helicopter manuals into Vietnamese.

Another thought: perhaps we do need monster movies starring humanities profs, if only to help us expand the scope of our imagination. Who’s Afraid of Virginia Woolf? is not quite on point here, though it is a monster movie.

Heh. I can think of lots of movies where humanities profs turn out to be *monsters*. They’re one of the favorite categories of murderers on “Inspector Lewis.”

Very thoughtful blog.