Most of what I’m about to say is directly lifted from articles in corpus linguistics (1, 2), but I don’t think these results have been widely absorbed yet by people working in digital humanities, so I thought it might be worthwhile to share them, while demonstrating their relevance to literary topics.

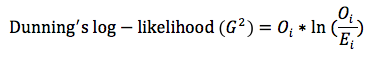

The basic question is just this: if I want to know what words or phrases characterize an author or genre, how do I find out? As Ben Schmidt has shown in an elegantly visual way, simple mathematical operations won’t work. If you compare ratios (dividing word frequencies in the genre A that interests you by the frequencies in a corpus B used as a point of comparison), you’ll get a list of very rare words. But if you compare the absolute magnitude of the difference between frequencies (subtracting B from A), you’ll get a list of very common words. So the standard algorithm that people use is Dunning’s log likelihood,

— a formula that incorporates both absolute magnitude (O is the observed frequency) and a ratio (O/E is the observed frequency divided by the frequency you would expect). For a more complete account of how this is calculated, see Wordhoard.
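The measure described here is usually written as G² = 2 Σ Oᵢ ln(Oᵢ / Eᵢ). What follows is a minimal sketch of how it might be computed for a single word. It assumes the two-term form used by common log-likelihood calculators, where the expected counts come from pooling both corpora. The function name `dunning_g2` and the counts in the example are hypothetical and are not taken from the post.

```python
import math

def dunning_g2(count_a, total_a, count_b, total_b):
    """Two-term log-likelihood (G-squared) for one word in corpus A vs. corpus B.

    count_* is the word's observed frequency (O) in each corpus;
    total_* is the number of word tokens in each corpus.
    """
    # Expected frequency (E) if the word were equally common in both corpora.
    pooled_rate = (count_a + count_b) / (total_a + total_b)
    expected_a = total_a * pooled_rate
    expected_b = total_b * pooled_rate

    g2 = 0.0
    for observed, expected in ((count_a, expected_a), (count_b, expected_b)):
        if observed > 0:  # treat 0 * ln(0) as 0
            g2 += observed * math.log(observed / expected)
    return 2 * g2

# Hypothetical counts: a word seen 16 times in 40,000 words of poetry
# and 36 times in 40,000 words of prose.
score = dunning_g2(16, 40_000, 36, 40_000)
```

Note that G² itself is unsigned: it says how surprising the difference is, not which corpus the word favors, so the direction has to be read off by comparing O with E.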

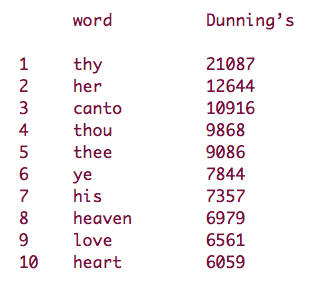

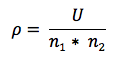

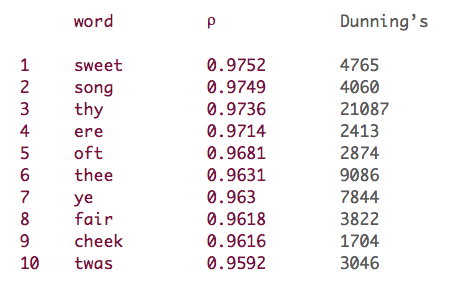

But there’s a problem with this measure, as Adam Kilgarriff has pointed out (1, pp. 237-38, 247-48). A word can be common in a corpus because it’s very common in one or two works. For instance, when I characterize early-nineteenth-century poetic diction (1800-1849) by comparing a corpus of 60 volumes of poetry to a corpus of fiction, drama, and nonfiction prose from the same period (3), I get this list:

Much of this looks like “poetic diction” — but “canto” is poetic diction only in a weird sense. It happens to be very common in a few works of poetry that are divided into cantos (works for instance by Lord Byron and Walter Scott). So when everything is added up, yes, it’s more common in poetry — but it doesn’t broadly characterize the corpus. Similar problems occur for a range of other reasons (proper nouns and pronouns can be extremely common in a restricted context).

The solution Kilgarriff offers is to instead use a Mann-Whitney ranks test. This allows us to assess how consistently a given term is more common in one corpus than in another. For instance, suppose I have eight text samples of equal length. Four of them are poetry, and four are prose. I want to know whether “lamb” is significantly more common in the poetry corpus than in prose. A simple form of the Mann-Whitney test would rank these eight samples by the frequency of “lamb” and then add up their respective ranks:

Since most works of poetry “beat” most works of prose in this ranking, the sum of ranks for poetry is higher, in spite of the 31 occurrences of lamb in one work of prose — which is, let us imagine, a novel about sheep-rustling in the Highlands. But a log-likelihood test would have identified this word as more common in prose.
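To make the ranking step concrete, here is a small sketch in Python. The counts are invented for illustration (only the 31 occurrences in one prose work echo the example above), and `rank_sums` is a hypothetical helper, not code from the post.

```python
def rank_sums(poetry, prose):
    """Rank all samples together (1 = lowest count) and sum the ranks per group.

    Tied counts share the average of the ranks they would occupy.
    """
    pooled = [(c, "poetry") for c in poetry] + [(c, "prose") for c in prose]
    pooled.sort(key=lambda pair: pair[0])

    sums = {"poetry": 0.0, "prose": 0.0}
    i = 0
    while i < len(pooled):
        # Find the run of samples that share the same count.
        j = i
        while j < len(pooled) and pooled[j][0] == pooled[i][0]:
            j += 1
        avg_rank = (i + 1 + j) / 2  # average of ranks i+1 .. j
        for _, group in pooled[i:j]:
            sums[group] += avg_rank
        i = j
    return sums

# Hypothetical occurrences of "lamb" in eight equal-length samples.
poetry_counts = [2, 3, 5, 6]
prose_counts = [0, 1, 4, 31]   # one prose work uses the word 31 times

sums = rank_sums(poetry_counts, prose_counts)
# sums == {"poetry": 20.0, "prose": 16.0}
```

With these made-up numbers poetry has the higher rank sum (20 against 16) even though prose has the higher raw total (36 occurrences against 16), which is the situation the paragraph above describes.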

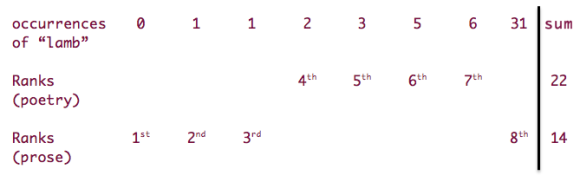

In reality, one never has “equal-sized” documents, but the test is not significantly distorted if one simply replaces absolute frequency with relative frequency (normalized for document size). (If one corpus has on average much smaller documents than the other does, there may admittedly be a slight distortion.) Since the number of documents in each corpus is also going to vary, it’s useful to replace the raw rank-sum statistic (U) with a statistic ρ (Mann-Whitney rho): U divided by the product of the sizes of the two corpora, where “size” means the number of documents in each.
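A rough sketch of ρ, under stated assumptions: U is computed here in its pairwise form, as the number of (poetry, prose) document pairs in which the poetry document has the higher relative frequency, with ties counted as half. That is equivalent to the poetry rank sum minus n(n+1)/2, where n is the number of poetry documents. The function name and the document counts and lengths are hypothetical.

```python
def mann_whitney_rho(freqs_a, freqs_b):
    """U for corpus A divided by (number of A documents * number of B documents).

    freqs_a and freqs_b hold one relative frequency per document.
    """
    wins = 0.0
    for a in freqs_a:
        for b in freqs_b:
            if a > b:
                wins += 1.0
            elif a == b:
                wins += 0.5   # a tie counts as half a win
    return wins / (len(freqs_a) * len(freqs_b))

# Hypothetical (occurrences of the word, document length in words).
poetry_docs = [(2, 8_000), (3, 12_000), (5, 10_000), (6, 9_000)]
prose_docs = [(0, 15_000), (1, 20_000), (4, 40_000), (31, 60_000)]

# Normalize for document size before ranking.
poetry_freqs = [count / length for count, length in poetry_docs]
prose_freqs = [count / length for count, length in prose_docs]

rho = mann_whitney_rho(poetry_freqs, prose_freqs)
# rho == 0.8125: poetry wins 13 of the 16 document pairs
```

Read this way, ρ is the share of cross-corpus document pairs in which the first corpus comes out ahead, so 0.5 means no tendency either way and values near 1 mean the word is consistently more frequent in the first corpus.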

Using this measure of over-representation in a corpus produces a significantly different model of “poetic diction”:

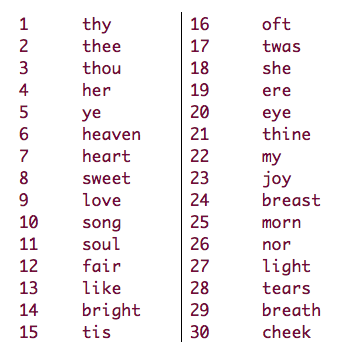

This looks at first glance like a better model. It demotes oddities like “canto,” but also slightly demotes pronouns like “thou” and “his,” which may be very common in some works of poetry but not others. In general, it gives less weight to raw frequency, and more weight to the relative ubiquity of a term in different corpora. Kilgarriff argues that the Mann-Whitney test thereby does a better job of identifying the words that characterize male and female conversation (1, pp. 247-48).

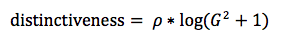

On the other hand, Paul Rayson has argued that by reducing frequency to a rank measure, this approach discards “most of the evidence we have about the distribution of words” (2). For linguists, this poses an interesting, principled dilemma, where two statistically incompatible definitions of “distinctive diction” are pitted against each other. But for a shameless literary hack like myself, it’s no trouble to cut the Gordian knot with an improvised algorithm that combines both measures. For instance, one could multiply rho by the log of Dunning’s log likelihood (represented here as G-squared) …
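As a sketch, the improvised combination amounts to score = ρ × log(G²). The version below assumes a natural logarithm (the post does not specify a base) and takes ρ and G² as values already computed elsewhere. The function name and the example figures are hypothetical.

```python
import math

def combined_score(rho, g2):
    """Mann-Whitney rho multiplied by the log of Dunning's G-squared."""
    if g2 <= 0:
        # The logarithm is undefined at 0, so such words cannot be scored.
        raise ValueError("G-squared must be positive to take its logarithm")
    return rho * math.log(g2)

# Hypothetical values: a word that is bursty (huge G-squared, middling rho)
# and a word that is consistently over-represented (modest G-squared, high rho).
bursty = combined_score(rho=0.55, g2=400.0)
consistent = combined_score(rho=0.9, g2=50.0)
```

Taking the log compresses the very wide range of G², so ρ does most of the work of ordering words. One caveat of this form is that the log turns negative whenever G² falls below 1, so in practice it would probably only be applied to words that already clear some significance threshold.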

I don’t yet know how well this algorithm will perform if used for classification or authorship attribution. But it does produce what is for me an entirely convincing portrait of early-nineteenth-century poetic diction:

Of course, once you have an algorithm that convincingly identifies the characteristic diction of a particular genre relative to other publications in the same period, it becomes possible to say how the distinctive diction of a genre is transformed by the passage of time. That’s what I hope to address in my next post.

UPDATE Nov 10, 2011: As I continue to use these tests in different ways (using them e.g. to identify distinctively “fictional” diction, and to compare corpora separated by time) I’m finding the Mann-Whitney ρ measure more and more useful on its own. I think my urge to multiply it by Dunning’s log-likelihood may have been the needless caution of someone who’s using an unfamiliar metric and isn’t sure yet whether it will work unassisted.

References

(1) Adam Kilgarriff, “Comparing Corpora,” International Journal of Corpus Linguistics 6.1 (2001): 97-133.

(2) Paul Rayson, Matrix: A Statistical Method and Software Tool for Linguistic Analysis through Corpus Comparison. Unpublished Ph.D. thesis, Lancaster University, 2003, p. 47. Cited in Magali Paquot and Yves Bestgen, “Distinctive words in academic writing: A comparison of three statistical tests for keyword extraction,” Corpora: Pragmatics and Discourse. Papers from the 29th International Conference on English Language Research on Computerized Corpora (ICAME 29), Ascona, Switzerland, 14-18 May 2008, p. 254.

(3) The corpora used in this post were selected by Jordan Sellers, mostly from texts available in the Internet Archive, and corrected with a Python script described in this post.

14 replies on “Identifying diction that characterizes an author or genre: why Dunning’s may not be the best method.”

Well, shoot–I was just finally getting around to writing something in response to your comments along these lines, and now you scuttle that with a better method. In terms of indiscriminately multiplying unrelated terms together, I also wonder what happens if you throw IDF into the mix–would Dunning-IDF scores be better than pure Dunning scores for revealing meaningful diction, or maybe worse? It wouldn’t solve your lamb example, but might help with the more general problem of over-representation of the most common words.

What this brings to my mind: as Dunning himself pointed out a couple years ago, one of the big appeals of his test is that it’s extraordinarily simple. I’d have to think about it a bit more, but it seems that Mann-Whitney scores are hard, maybe prohibitively so, to apply to at least my data structure, and maybe to anything resembling it (i.e., any term-document matrix that’s hundreds of thousands of elements in both dimensions). Am I wrong about this? That is, are your compute times higher for one or the other?

Anyhow, that’s not really an objection, just an explanation for why I might post my thing anyway without taking all this into account.

Definitely post it! I’ll be interested to read it. We should be tossing around a bunch of different possible solutions to any given problem. That was exactly what I liked about your post on Dunning’s: it insisted on looking inside the black box and asking what a widely-used algorithm is actually doing for us as humanists.

Plus, I do think there are limitations on the Mann-Whitney method. Calculation time isn’t a big issue, because it’s built into R as wilcox.test(). But I’m not sure how reliable it is with small corpora, and obviously it would become useless with individual texts.

Also, I’m not enough of a statistician to figure out quite how reliable it is when document sizes vary widely. With small documents, and rare words, you could get into a situation where there are a whole lot of zero-to-zero ties in the ranking list, and I’m not sure yet what effect that would have on reliability.

Ok, that post is up. No real solution to the comparison problem…

If you don’t mind me cluttering up your comments section, I’ll just put a comparison of raw Dunning vs Dunning-IDF scores here, since it’s slightly more germane to this discussion than to mine.

I’m not sure it’s better, but it could certainly go in the mix. (It’s possible what we really want is a tool like Gary King’s topic browser for choosing among different word relevance schemes based on our interests.) Basically, the idea there is that TF-IDF is already designed to extract the topically relevant words from a document; we can treat a whole genre as a single document, and that may or may not be better than using Dunning scores.

One thing this does with fiction is pull out characteristic names of fictional characters (“Jack,” “Dick,” etc.). I’m not sure if that’s good or bad behavior.

Dunning scores, Straight

[1] “she” “you” “her” “he” “had” “was” “said” “don”

[9] “look” “me” “him” “his” “my” “go” “know” “ll”

[17] “could” “eyes” “up” “little” “but” “ve” “face” “what”

[25] “back” “your” “did” “out” “girl” “think” “would” “like”

[33] “down” “tell” “went” “knew” “get” “Oh” “got” “came”

[41] “thought” “come” “then” “asked” “do” “want” “just” “why”

[49] “moment” “door”

Dunning scores multiplied by IDF to deprecate extremely common words.

[1] “she” “you” “her” “don” “ve” “Oh”

[7] “ain” “ll” “girl” “smile” “didn” “goin”

[13] “laugh” “aunt” “exclaimed” “Monsieur” “yer” “wouldn”

[19] “isn” “git” “wuz” “Miss” “sat” “ye”

[25] “cried” “stared” “couldn” “Dick” “herself” “whispered”

[31] “me” “glance” “Lady” “Madame” “gaze” “eyes”

[37] “Billy” “Jack” “Tom” “boy” “wasn” “got”

[43] “Pierrot” “stood” “uncle” “he” “nodded” “look”

[49] “knew” “em”

Yes, I find that even just using normal Dunning’s I often get a lot of character names when I’m working with fiction. There are really two separate problems with Dunning’s. The first is that it strongly favors common words (which is probably great if you’re using it to generate a set of criteria for classification or something, but not so great if you’re looking for legible results). The second is that it doesn’t penalize words that are extremely common in a very restricted context, such as character names and the like.

I imagine multiplying by IDF will address the first of these problems, but actually aggravate the second one, inasmuch as it favors terms with a restricted distribution.

Thanks for the link to Gary King’s topic browser: looks like an ambitious project!
