It’s only in the last few months that I’ve come to understand how complex search engines actually are. Part of the logic of their success has been to hide the underlying complexity from users. We don’t have to understand how a search engine assigns different statistical weights to different terms; we just keep adding terms until we find what we want. The differences between algorithms (which range widely in complexity, and are based on different assumptions about the relationship between words and documents) never cross our minds.
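To make the idea of "statistical weights" a little more concrete, here is a minimal sketch of one familiar weighting scheme, TF-IDF. This is an illustration only: I'm not claiming any particular search engine works this way, and the function name `tf_idf` and the three sample "documents" are invented for the example.

```python
import math
from collections import Counter

def tf_idf(docs):
    """Return one {term: weight} dict per document (hypothetical helper)."""
    # Naive tokenization: lowercase and split on whitespace.
    tokenized = [doc.lower().split() for doc in docs]
    n_docs = len(tokenized)
    # Document frequency: in how many documents does each term appear?
    df = Counter(term for tokens in tokenized for term in set(tokens))
    weights = []
    for tokens in tokenized:
        counts = Counter(tokens)
        weights.append({
            # Term frequency within this document, scaled by how rare
            # the term is across the whole collection.
            term: (count / len(tokens)) * math.log(n_docs / df[term])
            for term, count in counts.items()
        })
    return weights

# Invented sample documents, purely for illustration.
docs = [
    "the man of fashion",
    "the diction of the poem",
    "the poem did excite the reader",
]
weights = tf_idf(docs)
```

In this toy collection, "the" appears in every document and so receives a weight of zero, while a word like "fashion," which appears in only one, is weighted more heavily than "of," which appears in two. That is roughly the kind of judgment a search engine makes on our behalf without telling us.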

I’m pretty sure search engines have transformed literary scholarship. There was a time (I can dimly recall) when it was difficult to find new primary sources. You had to browse through a lot of bibliographies looking for an occasional lead. I can also recall the thrill I felt, at some point in the 90s, when I realized that full-text searches in a Chadwyck-Healey database could rapidly produce a much larger number of leads — things relevant to my topic, that no one else seemed to have read. Of course, I wasn’t the only one realizing this. I suspect the wave of challenges to canonical “Romanticism” in the 90s had a lot to do with the fact that Romanticists all realized, around the same time, that we had been looking at the tip of an iceberg.

One could debate whether search engines have exerted, on balance, a positive or negative influence on scholarship. If the old paucity of sources tempted critics to endlessly chew over a small and unrepresentative group of authors, the new abundance may tempt us to treat all works as more or less equivalent — when perhaps they don’t all provide the same kinds of evidence. But no one blames search engines themselves for this, because search isn’t an evidentiary process. It doesn’t claim to prove a thesis. It’s just a heuristic: a technique that helps you find a lead, or get a better grip on a problem, and thus abbreviates the quest for a thesis.

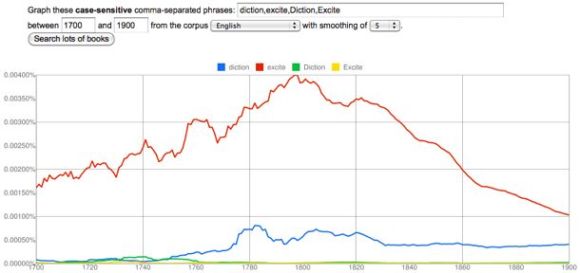

The lesson I would take away from this story is that it’s much easier to transform a discipline when you present a new technique as a heuristic than when you present it as evidence. Of course, common sense tells us that the reverse is true. Heuristics tend to be unimpressive. They’re often quite simple. In fact, that’s the whole point: heuristics abbreviate. They also “don’t really prove anything.” I’m reminded of the chorus of complaints that greeted the Google ngram viewer when it first came out, to the effect that “no one knows what these graphs are supposed to prove.” Perhaps they don’t prove anything. But I find that in practice they’re already guiding my research, by doing a kind of temporal orienteering for me. I might have guessed that “man of fashion” was a buzzword in the late eighteenth century, but I didn’t know that “diction” and “excite” were as well.

What does a miscellaneous collection of facts about different buzzwords prove? Nothing. But my point is that if you actually want to transform a discipline, sometimes it’s a good idea to prove nothing. Give people a new heuristic, and let them decide what to prove. The discipline will be transformed, and it’s quite possible that no one will even realize how it happened.

POSTSCRIPT, May 1, 2011: For a slightly different perspective on this issue, see Ben Schmidt’s distinction between “assisted reading” and “text mining.” My own thinking about this issue was originally shaped by Schmidt’s observations, but on re-reading his post I realize that we’re putting the emphasis in slightly different places. He suggests that digital tools will be most appealing to humanists if they resemble, or facilitate, familiar kinds of textual encounter. While I don’t disagree, I would like to imagine that humanists will turn out in the end to be a little more flexible: I’m emphasizing the “heuristic” nature of both search engines and the ngram viewer in order to suggest that the key to the success of both lies in the way they empower the user. But — as the word “tricked” in the title is meant to imply — empowerment isn’t the same thing as self-determination. To achieve that, we need to reflect self-consciously on the heuristics we use. Which means that we need to realize we’re already doing text mining, and consider building more appropriate tools.

This post was originally titled “Why Search Was the Killer App in Text-Mining.”

4 replies on “How you were tricked into doing text mining.”

If search is the killer app, do you think people in English acknowledge that? I’ve been mostly worried lately about how historians tend to privately confess to their reliance on search engines, rather than acknowledging openly that they enable so much of modern history.

You’re definitely right about the problem of false equivalence among books being a big issue facing text analysis; I have a fantasy we’ll someday be able to measure it all away with citation analysis or aggregated library records, but that’s probably never going to happen. I think one of the things we need to accept about the heuristics of text mining is that in general it can’t disprove existing readings, which are rather explicitly based on one set of texts and one way of reading. Trying to operationalize those theories to test them with a computer usually involves stripping away so much subtlety that no one can ever land a knockout punch.

Oh, we definitely don’t acknowledge our use of search engines in English. I mean, of course if pressed we would. But as long as no one forces me to say how I found this source, naturally I’m going to leave open the possibility that I just happened to stumble over it at the British Library while looking through a box of manuscripts.

What you say about text mining not having the power to disprove existing readings seems to me exactly right. It’s not that text mining will never have evidentiary force. It will, in many cases. But it’s always going to be weaker on that ground — because, for good or ill, it’s always going to be benchmarked against human judgment. That’s one of the reasons why I’m reluctant to use digital techniques to replicate kinds of interpretation human researchers already perform: it seems to me like a no-win scenario.

Hi, nice reading your post.